Reproducing the uniquely human way of seeing things in a virtual world

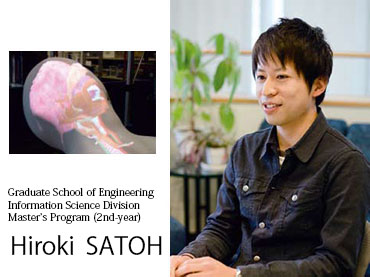

Developing a more realistic anatomical model by projecting computer graphics onto a simulated torso

During my years as a student, I began research into virtual reality (VR※1). Of course, I was interested in the physical world as well, but this was at the time when computer performance had started to really improve. Thinking that the power of computers might allow virtual spaces and objects to be produced more realistically in the near future, I undertook a thorough investigation of the possibilities.

Around 1998, I had the idea of placing a projector on a person's head and using it to project images. Goggle-type displays cut off the outside world and allow you to immerse yourself in a virtual experience, but as soon as you start to walk around in physical space, you bump into things and are jerked back into reality. I wondered if this shortcoming could be addressed by placing a projector on your head so that you could see virtual images superimposed on top of actual objects in the direction you faced.

However, in order to place a projector on a person's head, you must first get past a variety of problems, such as the need to reduce the weight of the projector. Next, I had the idea of projecting a virtual image onto a specific object. This led me to conduct joint research into virtual anatomical models (VAMs※2) for use in teaching medical students with Professor Yuzo Takahashi of the School of Medicine, who has since retired. By projecting computer graphics depicting internal organs onto a simulated torso※3, we were able to give the structures a more realistic appearance, making the model more believable. We succeeded in reproducing human anatomy in a way that let students see bones and organs three-dimensionally as if the skin were transparent, complete with realistic movements.

Reproducing the uniquely human way of seeing things with computer graphics and sensors

looking with one eye through the hole in it, you can see more three-dimensional computer graphics. In this design, a sensor detects the position of the person viewing the device so that a suitable image can be shown.

In fact, it is extremely difficult to project a virtual image onto an actual object so that it appears to be both accurate and realistic. This difficulty arises from the fact that human beings see objects through an "image experience" constructed in the brain. For example, when viewing an object with two eyes, you do so while unconsciously sensing how your body is moving. In short, you see the object that you want to see while naturally combining information about your own movements and orientation with sensory input from your eyes. This is known as self-motion vision. By contrast, the process of ascertaining the shape of a three-dimensional object by viewing it as you move it is known as object-motion vision. We created our VAM by incorporating these two types of vision. When you flip over the torso, sensors detect that motion, and organs on the other side of the spine are shown. Similarly, if the viewer moves forward, backward, left, or right, the top or sides of organs are shown, making the experience more realistic.
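The way the two kinds of motion combine can be illustrated with a minimal sketch. It is not the VAM's actual implementation: the function names, the coordinate convention (y is up, the torso's front faces +z at zero rotation), and the restriction to rotation about the vertical axis are all assumptions made for illustration. The idea is that the viewer's tracked position (self-motion) and the torso's tracked orientation (object motion) reduce to a single quantity, the viewing direction expressed in the model's own coordinates, which then decides what to draw.

```python
import math

def viewer_in_model_frame(viewer_pos, model_pos, model_yaw_deg):
    """Return the unit direction from the model to the viewer, in model coordinates.

    Assumed convention (not from the article): y is up, the model turns only
    about the vertical axis, and its front faces +z when model_yaw_deg is 0.
    """
    dx = viewer_pos[0] - model_pos[0]
    dy = viewer_pos[1] - model_pos[1]
    dz = viewer_pos[2] - model_pos[2]
    length = math.sqrt(dx * dx + dy * dy + dz * dz)
    if length == 0.0:
        raise ValueError("viewer and model are at the same position")
    dx, dy, dz = dx / length, dy / length, dz / length

    # Apply the inverse of the model's yaw to express the direction in model space.
    yaw = math.radians(model_yaw_deg)
    local_x = dx * math.cos(yaw) - dz * math.sin(yaw)
    local_z = dx * math.sin(yaw) + dz * math.cos(yaw)
    return (local_x, dy, local_z)

def visible_side(viewer_pos, model_pos, model_yaw_deg):
    """Pick which side of the torso's organs to draw: 'front' or 'back'."""
    _, _, local_z = viewer_in_model_frame(viewer_pos, model_pos, model_yaw_deg)
    return "front" if local_z >= 0.0 else "back"
```

In this sketch, flipping the torso by 180 degrees and walking around to the far side of a stationary torso both yield `"back"`, which mirrors how object motion and self-motion lead to the same change in what is shown.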

Last academic year, I focused on developing technology related to the control of the model, for example by using a handheld laser projector to make it possible to view organs that are located deeper in the body. I am also conducting research into projecting images from multiple directions onto a model of a human head using two or three projectors.

The difficult thing about this research is that it requires a high level of familiarity with how computer graphics are shown and seen; in short, with the characteristics of human perception and the methods of graphical rendering. For example, it is more difficult to display computer graphics on a head, which is spherical in shape, than on a flat body. More research is needed in order to understand these challenges.

Need for more people to think about content for commercialization in medicine and industry

I first got involved with medical VAMs for two reasons: the subject matter itself was complicated, and I wanted to create something that would be challenging. However, in the actual practice of medicine, it's even more difficult to educate hospital staff working in areas such as nursing and nursing care. These workers must master an extensive range of knowledge in a short period of time, and the educational methods used must be simpler than those utilized by medical schools. One decisive difference is that it is not possible to give them an experience of how autopsies are conducted. I think VAMs are extremely promising as an alternative to such training.

I am currently conducting joint research with Chubu Gakuin University into a VAM for use in teaching nursing home staff how to prevent aspiration. We believe that creating a VAM for use in studying jaw reflexes and muscle structure and mechanisms will lead to more practical education. Medical education has reached the stage of introducing simulation-based education into the curriculum. I am confident that the day will come when VAMs are used in a variety of settings, including as peripheral equipment for emergency medicine and surgery simulators.

I am also conducting research into commercializing VAMs in other fields. Shintec Hozumi in Ogaki is currently developing a type of experiential three-dimensional content known as Vrem※4 using our VAM technologies as part of a joint development project. We are promoting this technology, which makes it possible to create content that can be viewed three-dimensionally from various angles by changing the viewpoint and moving the model, to companies such as automakers and homebuilders.

Right now, it is difficult to commercialize VAM technology due to a lack of content creators. For instance, in medical education, we need people to think about not only computer graphics depicting organs, but also how those graphics can be incorporated into the actual practice of medicine. In industry, we need people to think about how computer graphics depicting engines can be put to use in the design process. It's difficult to start commercializing products solely by transferring technology; I think we need an intermediate business entity that will create consensus around a project.

There are various issues to be overcome, but I look forward to continuing to work toward the commercialization of this technology in various industries.

- ※1 VR, or virtual reality: Technology for artificially creating a sense of reality concerning space, objects, and time by using computer graphics, sound, and other elements to trigger the human senses of sight and hearing.

- ※2 VAM, or virtual anatomical model: A virtual dissection model.

- ※3 Simulated torso: A model of the human torso.

- ※4 Vrem, or virtual reality embedded model: Experiential three-dimensional content projected as a three-dimensional video based on the viewer's viewpoint and the model's position; a product jointly developed with Shintec Hozumi.

We spoke to Associate Professor Kijima about the wonders of virtual reality.

Q. What is the current state of the art in three-dimensional technology?

A. Three-dimensional images exploded in popularity around the time of the International Exposition in 1985 (the Tsukuba Expo), and their popularity has been increasing again in recent years. Projection mapping, a technique that projects computer graphics onto objects such as buildings, is also attracting attention. The projection mapping staged when Tokyo Station was renovated generated a lot of buzz. One toy manufacturer has developed a popular toy that lets users restage the projection mapping onto a miniature Tokyo Station drawn in a small box by downloading an app onto their smartphone and placing it in the box to project the images. By creating overlap between the physical and virtual spaces, projection mapping exerts a wondrous appeal that excites viewers.

Q. How do people's eyes see objects?

A. The human eye is often compared to a camera, but that is inaccurate. "Image experiences" are created in the brain based on people's memories and experiences seeing things, and those image experiences enable them to see things. In short, we only see that with which we are familiar and that which we can see. By contrast, we cannot see that which we have never encountered before or that which is strange or unknown to us.

Q. How are we able to see three-dimensional images and video?

A. Our ability to view three-dimensional images and video relies on a mechanism of the human eye known as binocular parallax. Because the left and right eyes supply slightly different information to the brain, we are able to perceive depth and breadth. For example, imagine that we draw a picture, some of it with a red pen and some with a blue pen. When we view the picture through red cellophane, we will only be able to see the blue portion, and when we view it through blue cellophane, we will only be able to see the red portion. In this way, we can create a three-dimensional effect. There are many researchers investigating stereoscopic vision but few focusing on motion vision, as I do.
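The cellophane example can be checked with a small sketch. It assumes idealized filters that pass exactly one color channel, pure red and pure blue ink, and white paper; the names `seen_through` and `is_visible` and the contrast threshold are hypothetical choices for illustration. A stroke is treated as visible only when, seen through the filter, it differs enough from the paper around it.

```python
WHITE_PAPER = (255, 255, 255)
RED_INK = (255, 0, 0)
BLUE_INK = (0, 0, 255)

# Index of each color channel in an (R, G, B) tuple.
CHANNEL = {"red": 0, "green": 1, "blue": 2}

def seen_through(filter_color, pixel):
    """Brightness of a pixel seen through an idealized filter that passes one channel."""
    return pixel[CHANNEL[filter_color]]

def is_visible(filter_color, ink, paper=WHITE_PAPER, min_contrast=64):
    """A stroke is visible only if it contrasts with the paper through the filter."""
    contrast = abs(seen_through(filter_color, paper) - seen_through(filter_color, ink))
    return contrast >= min_contrast
```

Through the red filter, red ink is as bright as the white paper and disappears, while blue ink turns dark and stands out. Placing a red filter over one eye and a blue filter over the other therefore delivers a different drawing to each eye, which is what produces the parallax.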

Students supporting research

Experiencing the potential and future promise of this technology, which has uses in medical education and industry

Currently, I am conducting research into a three-dimensional display system for uniformly projecting computer graphics onto an object such as a simulated torso using two or three projectors. I am paying particular attention to brightness in an effort to increase the quality of the images. Objects such as simulated torsos have curved surfaces, and the varying angles of the light create bright and dark areas. Because the human eye naturally compensates so that dark areas are perceived as being brighter than they actually are, there is a difference between the brightness on the screen and the brightness perceived by human viewers. I struggled with this difference at first, but now I find the question of how to overcome the difference and create uniform brightness an interesting one.
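The physical part of this brightness problem can be sketched as follows. This is an illustration, not the method used in the research: it assumes a matte (Lambertian) surface, for which brightness falls off with the cosine of the angle between the incoming light and the surface normal, and the function names and the `max_gain` limit are hypothetical. It also covers only physical brightness; the perceptual compensation by the human eye described above is not modeled.

```python
import math

def surface_brightness(incidence_deg):
    """Relative brightness of a matte patch lit at an angle from its normal (Lambert's cosine law)."""
    return max(0.0, math.cos(math.radians(incidence_deg)))

def compensation_gain(incidence_deg, max_gain=4.0):
    """Gain that would flatten brightness, clamped because a projector's output is limited."""
    brightness = surface_brightness(incidence_deg)
    if brightness <= 0.0:
        return max_gain
    return min(max_gain, 1.0 / brightness)

def combined_brightness(incidence_angles_deg):
    """Total relative brightness where several projectors light the same patch."""
    return sum(surface_brightness(angle) for angle in incidence_angles_deg)
```

Under these assumptions, a patch tilted 60 degrees away from a single projector receives half the light and would need a gain of about 2, while two projectors that each strike it at 60 degrees together restore roughly full brightness. Near grazing angles the clamp takes over, which is one reason additional projectors help on curved surfaces.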

Additionally, I am currently working with Chubu Gakuin University to develop VR for reproducing the mechanism of swallowing using a model of the human head. Next, I would like to reproduce the texture of human skin with a material such as silicone, develop a mechanism for making the model move, and link that movement with the computer graphics depicting swallowing. Projects such as these give me a renewed sense of the potential and future promise of this technology, which has uses in medical education and industry.

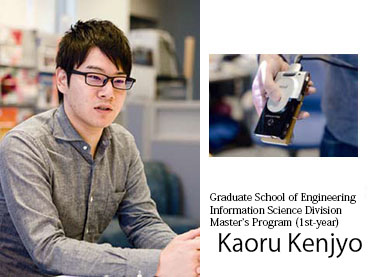

Researching visual effects with a handheld projector to enable the user to choose which portion of a model to view

I am currently conducting research into new methods for controlling the projection of computer graphics from freely chosen positions using a handheld projector in a way that takes into account the user experience. Specifically, I am working to verify how visual effects can be applied to the portion of an image shown with a freely movable handheld projector when projecting computer graphics using one fixed projector and one handheld projector. For example, with a VAM, the handheld projector is used to project graphics of organs with a greater sense of depth. Those graphics are surrounded with a black border to create an effect that makes them stand out. Research into this type of VR system remains rare, and I am motivated when large numbers of attendees show their interest at exhibitions and tradeshows.
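As a rough illustration of the black-border effect, the sketch below decides, for each point on the model's surface, whether it shows the handheld projector's detail graphics, the black border, or the fixed projector's base graphics. The circular spot shape, the function name `layer_at`, and its parameters are assumptions for illustration and are not taken from the actual system.

```python
import math

def layer_at(pixel, spot_center, spot_radius, border_width):
    """Decide what is shown at a pixel: handheld detail, black border, or fixed-projector base."""
    distance = math.hypot(pixel[0] - spot_center[0], pixel[1] - spot_center[1])
    if distance <= spot_radius - border_width:
        return "detail"  # deeper-organ graphics from the handheld projector
    if distance <= spot_radius:
        return "border"  # black ring that makes the detail stand out
    return "base"        # graphics from the fixed projector
```

As the user moves the handheld projector, only `spot_center` changes, so the detailed region and its border follow the user's hand while the rest of the image stays as it was.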

I am hopeful that one day, this system will enable handheld projectors to provide a detailed view of an interesting portion of a large projection mapping display.